Base Definition

“In the context of neural networks, a perceptron is an artificial neuron using the Heaviside step function as the activation function”

This definition is correct, but several things in it need clarification: What is an artificial neuron? What is a step function? What are activation functions?

Hence, before understanding what a perceptron is and where it came from, we need to look at where artificial neural networks get their roots: the neuron.

The Biological Inspiration of Artificial Neural Networks

Neurons in your brain are connected via axons. A neuron “fires” to the neurons it is connected to when enough of its input signals are activated.

When many of these neurons combine to form a complex network, they yield learning behaviour.

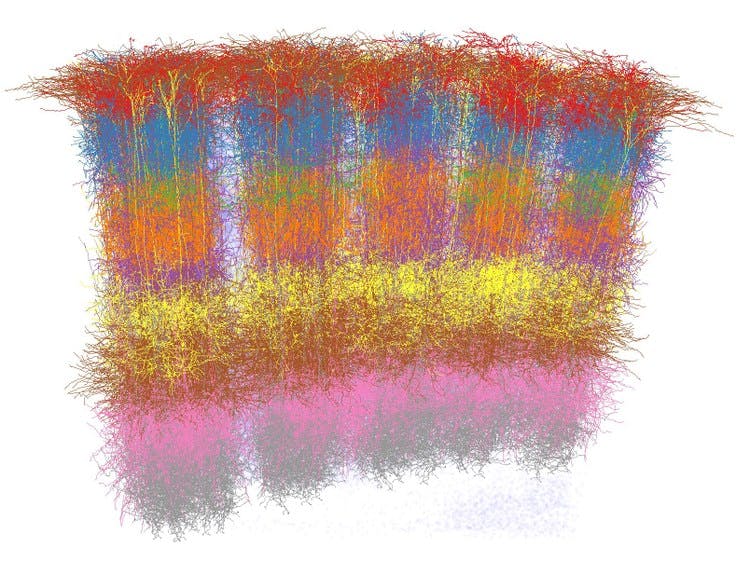

Moreover, neurons in your brain seem to be arranged into many stacks, or columns, that process information in parallel.

[Image 1] - Stacks of neurons in the brain (cortical columns), which allow parallel processing


Credits - Marcel Oberlaender et al. (Google Images)

The First Artificial Neurons

In 1943, researchers trying to replicate the basic building block of the brain proposed this simple architecture for an artificial neuron.

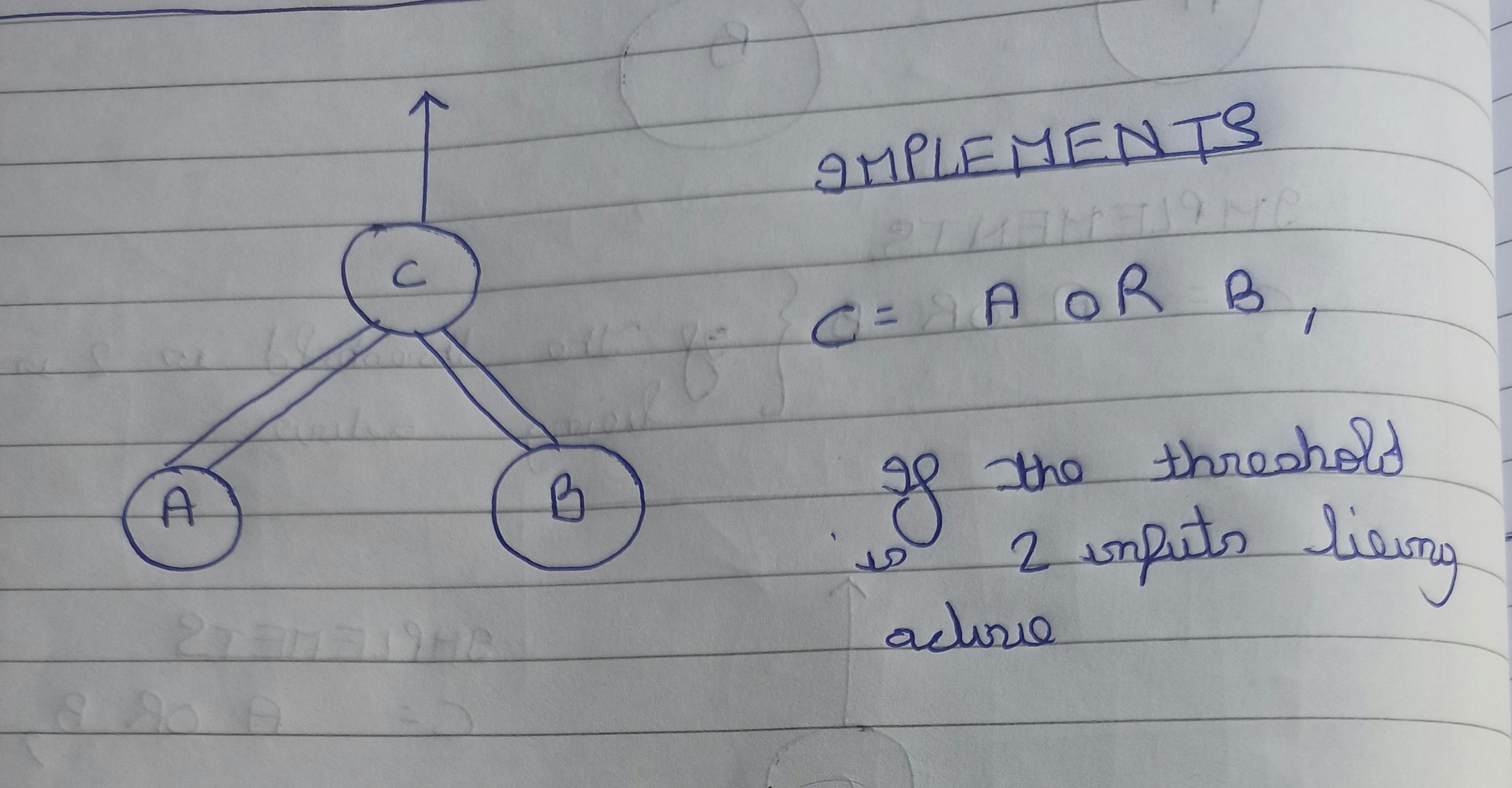

"Depending on the number of connections coming into each neuron, and on whether each connection activates or suppresses the neuron (which works the same way in nature), we can replicate different logical operations."

[Image 2] - Construction of an OR logic gate from an artificial neuron

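As a rough illustration of the idea above, here is a minimal sketch of such an on/off neuron in Python. The function names (`mp_neuron`, `gate_or`, `gate_and`, `gate_not`) and the exact firing rule (fire when enough activating inputs are on and no suppressing input is on) are assumptions made for this example, not something taken from the images.

```python
def mp_neuron(excitatory, inhibitory=(), threshold=1):
    """Binary artificial neuron (hypothetical helper for illustration).

    Returns 1 ("fires") when no inhibitory input is active and the number
    of active excitatory inputs reaches the threshold; otherwise returns 0.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


def gate_or(a, b):
    # One active input is enough to fire.
    return mp_neuron([a, b], threshold=1)


def gate_and(a, b):
    # Both inputs must be active to fire.
    return mp_neuron([a, b], threshold=2)


def gate_not(a):
    # An always-on excitatory input that is suppressed whenever a is active.
    return mp_neuron([1], inhibitory=[a], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "OR:", gate_or(a, b), "AND:", gate_and(a, b))
```

Only the threshold and the kind of connection change between gates, which is the point of the quote above.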

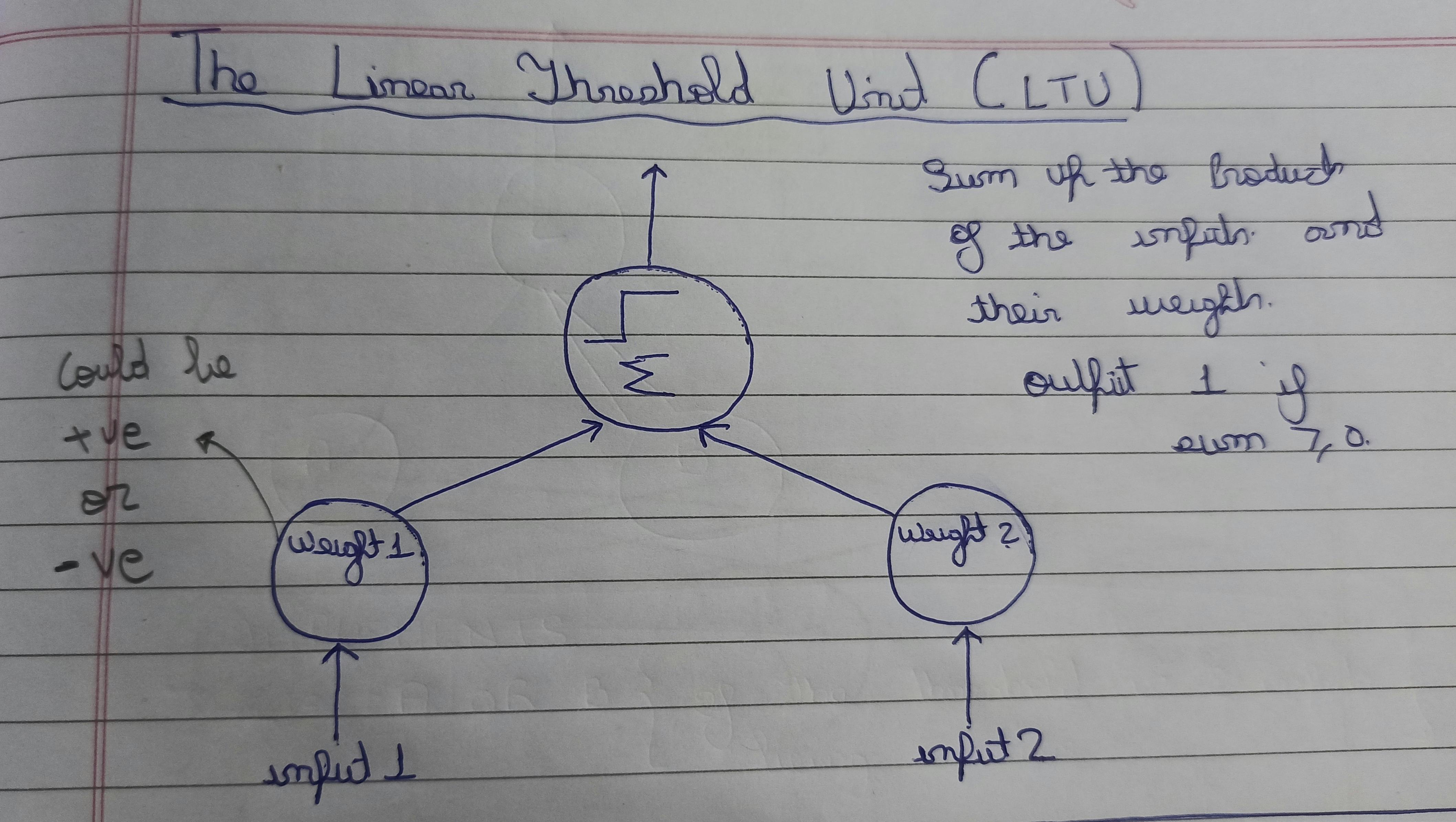

The Linear Threshold Unit (LTU)

In 1957, the simple on/off logic was extended by giving a weight to each input. The unit computes the weighted sum of its inputs, and a step function turns that sum into a yes/no output. It looked something like this.

[Image 3] - A Linear Threshold Unit (LTU) and its components (the on/off connections of the artificial neuron are replaced with weights).

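A minimal sketch of an LTU in Python may make this concrete. The names `heaviside` and `ltu`, the explicit bias term, and the convention that the step function returns 1 at exactly zero are assumptions of this example.

```python
def heaviside(z):
    """Step function: 1 if z >= 0, else 0 (the value at z == 0 is a convention)."""
    return 1 if z >= 0 else 0


def ltu(inputs, weights, bias):
    """Linear threshold unit: weighted sum of the inputs, then a step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return heaviside(z)


# Hand-picked illustrative weights that make the LTU behave like an OR gate.
or_weights, or_bias = [1.0, 1.0], -0.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", ltu([a, b], or_weights, or_bias))
```

Changing only the bias to -1.5 turns the same unit into an AND gate, so the behaviour now lives in the numbers rather than in the wiring.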

Note how we are getting closer and closer to the actual definition of a perceptron, which in turn defines the most basic unit of an artificial neural network.

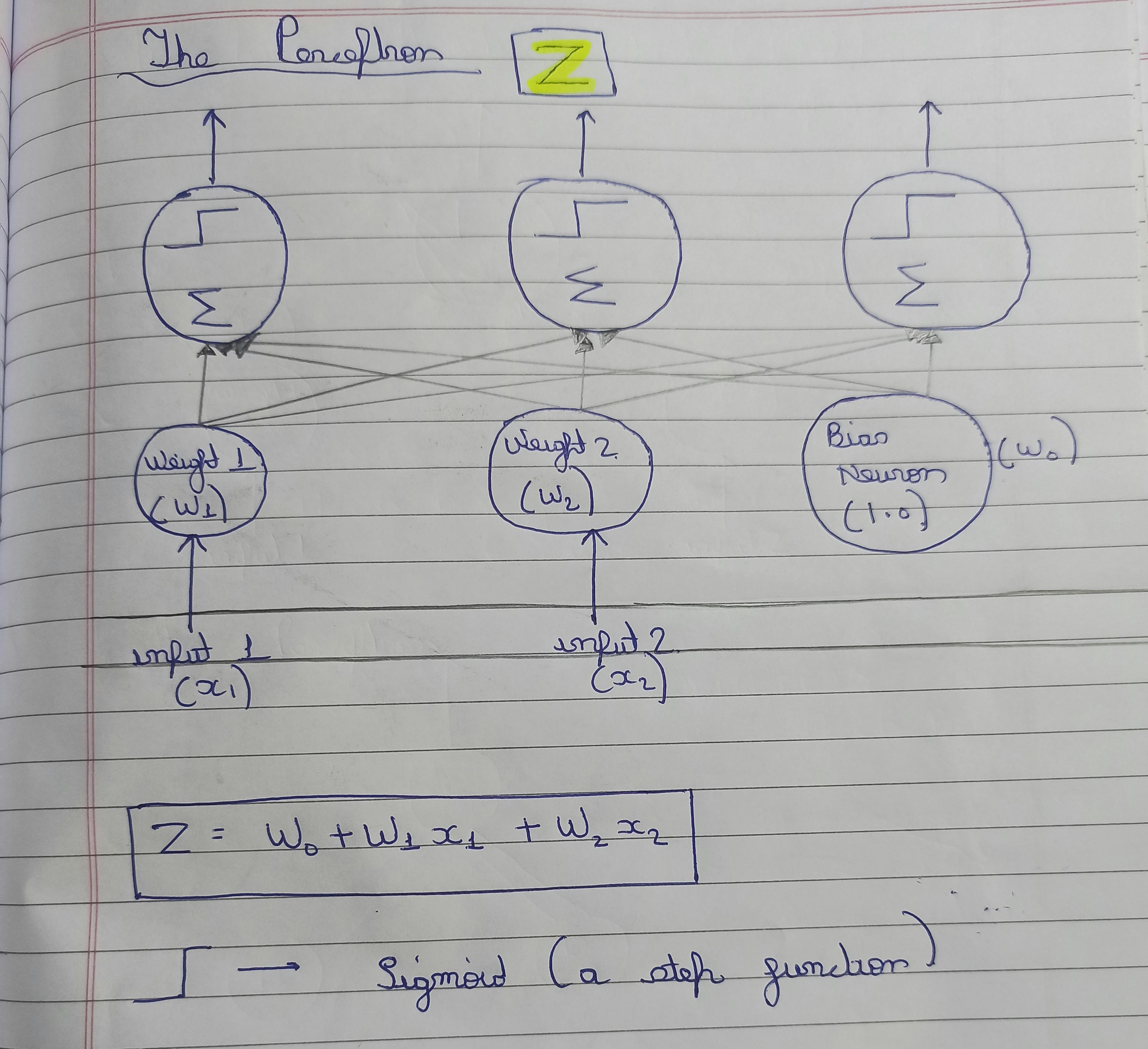

The Perceptron

Now we are starting to get into things that can actually learn.

A perceptron is a layer of LTUs.

A perceptron can learn by reinforcing the weights that lead to correct behaviour during training.

This, too, has a biological basis: “Cells that fire together, wire together.”

Weights (biological analogy): the strengths of the connections between neurons.

[Image 4] - A Perceptron
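The learning idea can be sketched with the classic perceptron update rule: whenever the prediction is wrong, nudge each weight in the direction that would have made it right. The sketch below trains a single-LTU perceptron on the AND function. The function names, the learning rate of 1, the zero initialisation, and the epoch limit are all assumptions chosen for illustration.

```python
def predict(inputs, weights, bias):
    """Single LTU: weighted sum followed by a step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z >= 0 else 0


def train_perceptron(samples, targets, learning_rate=1, max_epochs=50):
    """Perceptron rule: w += lr * (target - prediction) * x, applied per sample."""
    weights = [0] * len(samples[0])
    bias = 0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in zip(samples, targets):
            error = target - predict(x, weights, bias)
            if error != 0:
                mistakes += 1
                # Reinforce (or weaken) only the weights of the active inputs.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # every sample classified correctly, stop early
            break
    return weights, bias


# AND is linearly separable, so a single LTU can learn it.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, targets)
predictions = [predict(x, weights, bias) for x in samples]
print("weights:", weights, "bias:", bias, "predictions:", predictions)
```

Note that this rule only finds a solution when the classes can be separated by a straight line, which is one reason hidden layers become interesting.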

EXTRA

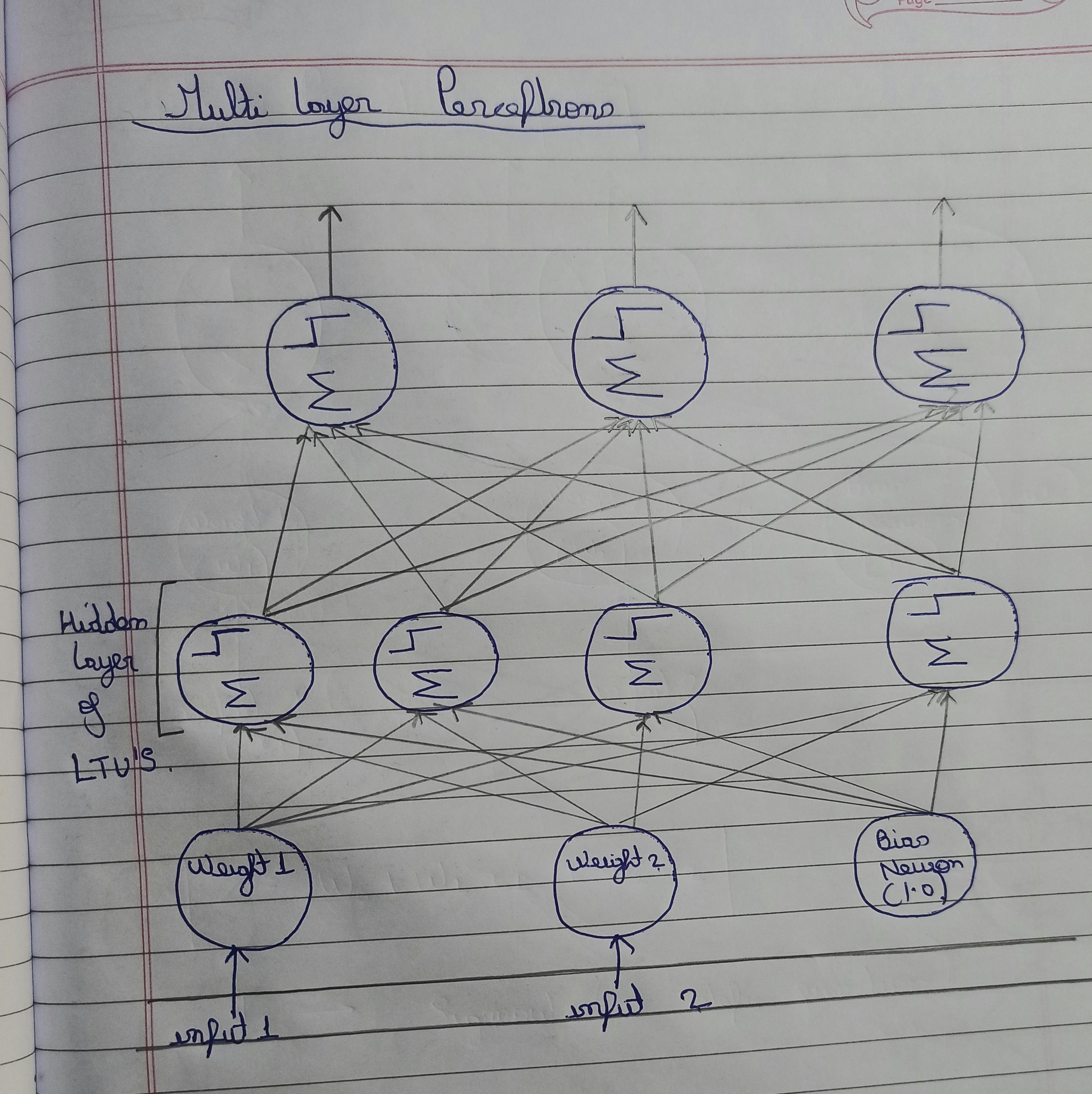

- Multi Layer Perceptron

- A Modern Deep Neural Network

Multi Layer Perceptron - A Deep Neural Network

- Addition of “Hidden Layers”

- This is a Deep Neural Network

- Training them is trickier

- There are a lot of connections, and so a lot of weights to optimize

A thing to appreciate here is the emergent behaviour. An LTU is a pretty simple concept, but when you group LTUs into layers and stack multiple layers, you can get a lot of complex behaviour.

[Image 5] - Multi-Layer Perceptrons
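One small way to see this emergent behaviour: a single LTU cannot compute XOR, but two layers of LTUs can. The sketch below uses hand-picked weights rather than trained ones. The function names and all weight values are assumptions made for this illustration.

```python
def ltu(inputs, weights, bias):
    """Linear threshold unit: weighted sum followed by a step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z >= 0 else 0


def xor_network(a, b):
    """Two-layer network of LTUs computing XOR with hand-picked weights."""
    # Hidden layer: one unit acts like OR, the other like AND.
    h_or = ltu([a, b], [1.0, 1.0], -0.5)
    h_and = ltu([a, b], [1.0, 1.0], -1.5)
    # Output unit fires when OR is on and AND is off.
    return ltu([h_or, h_and], [1.0, -1.0], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "XOR ->", xor_network(a, b))
```

Each unit is still just a weighted sum and a threshold. The new behaviour comes only from stacking them.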

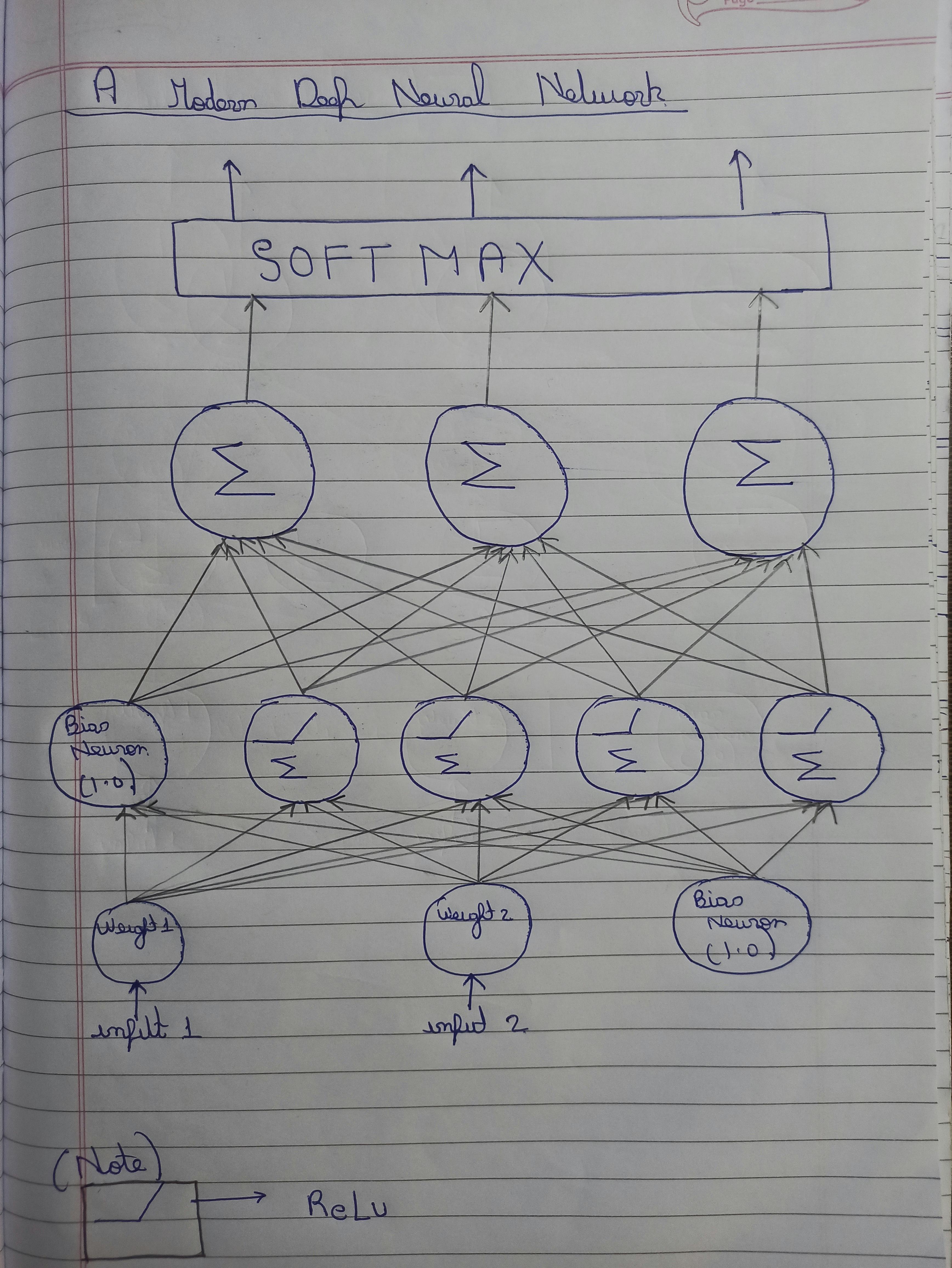

A Modern Deep Neural Network

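Replace the step activation function with something better. The step function is flat almost everywhere, so its derivative gives gradient-based training nothing to work with. A sigmoid is one smooth alternative; we use ReLU in our case, as its slope and derivative are simple to compute and it tends to make the output converge to the desired goal a little quicker.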

Apply Softmax to the Output

Train using gradient descent, with the gradients computed by automatic differentiation (autodiff)

[Image 6] - A modern generalized deep neural network (hidden layers of units + activation function (ReLU in our case) + softmax)
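To make the first two ingredients concrete, here is a minimal forward pass in plain Python: one hidden layer with ReLU, followed by softmax on the output. The helper names (`relu`, `softmax`, `dense`, `forward`), the layer sizes, and the weight values are arbitrary assumptions for illustration. Training with gradient descent is not shown.

```python
import math


def relu(z):
    """ReLU activation: passes positive values through, clips negatives to 0."""
    return max(0.0, z)


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(x, hidden_w, hidden_b, out_w, out_b):
    """Hidden layer with ReLU, then an output layer with softmax."""
    hidden = [relu(z) for z in dense(x, hidden_w, hidden_b)]
    return softmax(dense(hidden, out_w, out_b))


# Arbitrary illustrative parameters: 2 inputs, 2 hidden units, 3 output classes.
hidden_w = [[0.5, -0.2], [-0.3, 0.8]]
hidden_b = [0.0, 0.1]
out_w = [[1.0, -1.0], [-1.0, 1.0], [0.5, 0.5]]
out_b = [0.0, 0.0, 0.0]

probs = forward([1.0, 2.0], hidden_w, hidden_b, out_w, out_b)
print(probs, "sum =", sum(probs))
```

Because softmax outputs are positive and sum to 1, they can be read as class probabilities, which is what makes the output layer convenient for classification.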